[Updated 2018-04-16] I was corrected on Twitter about how Event (async) invocation throttling behaves, and have updated this post accordingly in several places marked like this one. Thanks to Tim Wagner for setting me straight!

I've been helping out on a project recently where we're doing a number of integrations with third-party services. The integration platform is built on AWS Lambda and the Serverless framework.

Aside from the data hygiene questions that you might expect in an integration project like this, one of the first things we've run into is a fundamental constraint in productionizing Lambda-based systems. As of today, AWS Lambda has the following limits (among others):

- max of 1000 concurrent executions per region (a soft limit that can be increased), and

- max duration of 5 minutes for a single execution

Imagine, then, that you have the following Lambda in Python:

    def start(event, context):
        employees = _fetch_all_employees(event['organization'])
        for employee in employees:
            _invoke_next_lambda(employee)

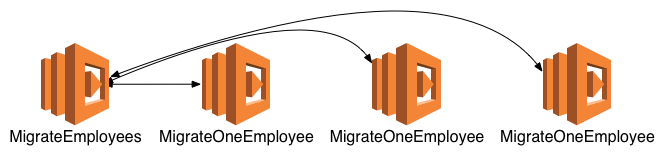

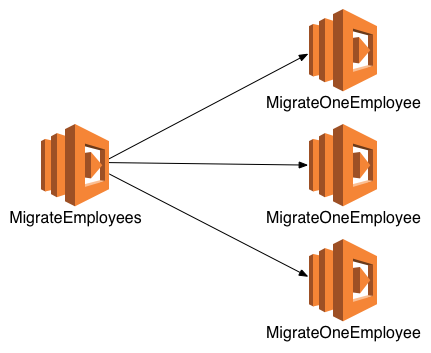

So this top-level Lambda fetches employee data and acts as a sort of Splitter, handing each employee off to the next step. This means that for every incoming message to this Lambda function, many more Lambda functions will be invoked. When algorithms have this kind of behavior, we say that they take "polynomial" time to complete. The "polynomial" here is O(N * M), where N is the number of inputs and M is the number of steps triggered by each input.

Now let's consider the following options for invoking these Lambda functions:

- Invoke the next Lambda using an InvocationType of RequestResponse (the default, which is synchronous).

- Invoke the next Lambda using an InvocationType of Event (which is asynchronous).

Let's take it for granted that we don't want to use the remaining InvocationType (it's called DryRun—you can probably guess why)!

Both of these options have costs and benefits.

By using the synchronous (RequestResponse) invocation, we could ensure in-order processing and avoid the risk of hitting the concurrent executions limit. But we would then risk exceeding the execution duration limit, due to the executions taking polynomial time.

Similar deal if we just decided to inline that Lambda's work into the top-level Lambda.

With the asynchronous (Event) invocation, on the other hand, we can avoid the risk of hitting the execution duration limit. But with this option, we'd run the risk of having some executions throttled due to the concurrent executions limit. Even if we asked AWS to bump the concurrency limit up, there would still be some limit, and we'd need to manage that concurrency somehow. Incidentally, even if there were no concurrency limit in AWS itself, it's likely that whatever work we're doing (e.g. connecting to a database or a third-party API) will have inherent concurrency limits as well.
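A rough back-of-the-envelope check shows how quickly either budget runs out. The sketch below uses the two limits quoted earlier; the function names and the sample workload are made up for illustration, and the asynchronous check assumes the worst case in which every child invocation runs at the same time.

```python
MAX_DURATION_SECONDS = 5 * 60  # per-execution duration limit quoted above
MAX_CONCURRENCY = 1000         # default per-region concurrency limit quoted above


def sequential_seconds(employee_count, seconds_per_employee):
    """Time the top-level Lambda needs if it waits for each child in turn."""
    return employee_count * seconds_per_employee


def fits_synchronously(employee_count, seconds_per_employee):
    """True if processing every employee one by one stays within the time budget."""
    return sequential_seconds(employee_count, seconds_per_employee) <= MAX_DURATION_SECONDS


def fits_asynchronously(employee_count, other_concurrent_executions=0):
    """True if one concurrent invocation per employee stays within the concurrency budget."""
    return employee_count + other_concurrent_executions <= MAX_CONCURRENCY
```

For a hypothetical organization of 2,000 employees at 2 seconds each, `fits_synchronously(2000, 2)` and `fits_asynchronously(2000)` are both false: the sequential run would need 4,000 seconds, and the fan-out could ask for twice the default concurrency.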

[Updated 2018-04-16] Throttling isn't such a big deal in an isolated case like the one I've described, since Lambda enqueues Event invocations and will retry for six hours. However, since the concurrency limit is account-wide, retries around these asynchronous invocations can cause unexpected throttling failures in unrelated synchronous invocations. Function-level concurrency limits are a way forward, which I'll describe below.

If there's not much work to be done (e.g. in a development / testing environment without much data), things might work just fine with either of these options. And at the edges of the limits, we could use manual retries, the Lambda retry policy, or Dead Letter Queues as safety nets that allow the right work to get done.

But as soon as we start to introduce more realistic data into the system, we'll find that retries aren't a complete solution. If it's possible, I'd rather not rely on retries for the "happy path", when we can already foresee the problems. Systems fail often enough for reasons we can't foresee, so let's save retries and Dead Letter Queues for the more unexpected issues.

Working within the Constraints

So where do we go when we're stuck between the time budget on the one hand, and the concurrency budget on the other?

One approach is to process work in batches, replacing the MigrateOneEmployee Lambda with something like a MigrateEmployeeBatch which might process 10 records at a time, and invoking Lambdas in the asynchronous Event style. From an algorithmic perspective, this is only reducing the concurrency by a constant factor—large numbers of employees could face the same issue. But in practice, a 10x reduction in concurrent executions may be plenty, particularly if the number of employees has a known upper bound!
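The batching step itself is small. Here is a minimal sketch, assuming the employees are already in a list; `batches`, `dispatch_in_batches`, and the `invoke_batch` callback are hypothetical names standing in for whatever actually invokes MigrateEmployeeBatch.

```python
def batches(items, batch_size=10):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def dispatch_in_batches(employees, invoke_batch, batch_size=10):
    """Call `invoke_batch` once per batch and return the number of invocations."""
    invocations = 0
    for batch in batches(employees, batch_size):
        invoke_batch(batch)  # e.g. an asynchronous (Event) Lambda invocation
        invocations += 1
    return invocations
```

With 25 employees and the default batch size, this makes three invocations instead of 25, with the last batch holding the remaining five records.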

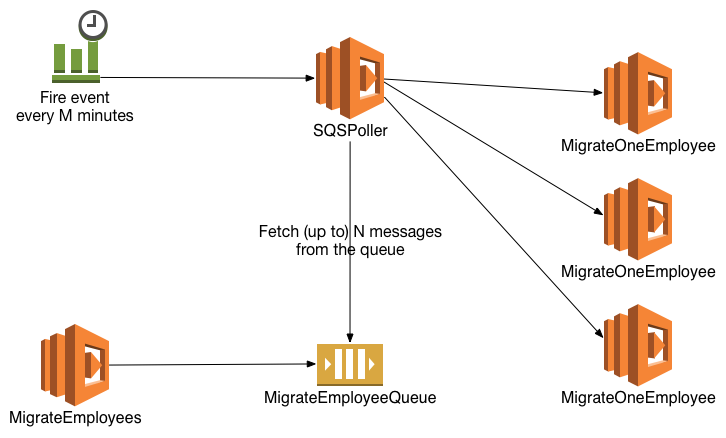

An alternative approach that's been working well for us has been to decouple the Lambdas with another mechanism: a queue.

We can solve any problem by introducing an extra level of indirection. 1

Inserting a queue between these two processes avoids both of the above constraints. First, like the asynchronous Lambda invocation, it avoids blocking the top-level Lambda while waiting for the child Lambda to complete. And secondly, like the synchronous Lambda invocation, it allows us to limit the number of concurrent Lambdas associated with this process. Using a queue here might seem like an obvious choice to folks who have been around awhile, but until you've seen these kinds of constraints, queues might seem like an unnecessary bit of indirection.

But what does this look like in practice? There are a bunch of options for queue-like processing in AWS: SQS, Kinesis Data Streams, and DynamoDB Streams are all reasonable options to consider, in addition to spinning up plain old EC2 instances with any messaging service we want (e.g. RabbitMQ, ActiveMQ, etc.). For our first iteration, we've settled on SQS, for a couple of reasons. Compared to the EC2 instance approach, SQS handles scalability for us. And compared to Kinesis Data Streams and DynamoDB Streams, SQS has the queue itself own the state of whether each message has been processed yet. This makes it straightforward to scale up more queue consumers (a la the Competing Consumers pattern) without having to coordinate them.
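On the producing side, the top-level Lambda would then write one message per employee to the queue instead of invoking the next Lambda directly. A minimal sketch with boto3's `send_message_batch`, which accepts at most 10 entries per call; the function name `enqueue_employees`, the `queue_url` value, and the injectable `sqs` argument are assumptions for illustration.

```python
import json

SQS_MAX_BATCH_ENTRIES = 10  # SQS accepts at most 10 messages per batch call


def enqueue_employees(employees, queue_url, sqs=None):
    """Send one SQS message per employee; return the Ids of entries SQS rejected."""
    if sqs is None:
        # Imported lazily so the module still loads where boto3 is absent.
        import boto3
        sqs = boto3.client("sqs")

    failed_ids = []
    for start in range(0, len(employees), SQS_MAX_BATCH_ENTRIES):
        chunk = employees[start:start + SQS_MAX_BATCH_ENTRIES]
        entries = [
            # Ids only need to be unique within a single batch request.
            {"Id": str(start + offset), "MessageBody": json.dumps(employee)}
            for offset, employee in enumerate(chunk)
        ]
        response = sqs.send_message_batch(QueueUrl=queue_url, Entries=entries)
        failed_ids.extend(entry["Id"] for entry in response.get("Failed", []))
    return failed_ids
```

Returning the failed Ids rather than raising leaves the retry decision to the caller, which seemed like the more flexible choice for a sketch.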

[Updated 2018-04-16] I've recently learned that we could get much the same effect by setting a function-level concurrency limit on the MigrateOneEmployee Lambda and using the Event invocation type. This gets us the benefits of a queue, without having to manage additional services or infrastructure. SQS does give us a bit more observability, more time to process the work (4 days by default, up to 14, vs. 6 hours in the hidden Lambda queue), and more potential for adding additional workers (which could live outside of Lambda's concurrency limits) to process load more quickly if the queue gets deep. But if we had known about this combination of function-level concurrency limits and Event throttling behavior, we likely would have chosen this route instead for our use case.
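For reference, a function-level limit can be set through the Lambda API. The sketch below uses boto3's `put_function_concurrency`; the wrapper name `limit_concurrency`, the injectable `client` argument, and the value of 10 in the usage note are assumptions for illustration, not what we actually deployed.

```python
def limit_concurrency(function_name, max_concurrent, client=None):
    """Reserve (and thereby cap) concurrency for one Lambda function."""
    if client is None:
        # Imported lazily so the module still loads where boto3 is absent.
        import boto3
        client = boto3.client("lambda")

    response = client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=max_concurrent,
    )
    # The API echoes back the limit that is now in effect.
    return response["ReservedConcurrentExecutions"]
```

Calling `limit_concurrency("MigrateOneEmployee", 10)` would cap that function at ten simultaneous executions; Event invocations beyond that are throttled and retried by Lambda rather than eating into the rest of the account's concurrency.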

So an approach like this one solves for both of the primary Lambda constraints (execution duration and concurrency level):

In the example design above, we're firing a Lambda every M minutes (minimum of one minute) to grab work from the queue, using CloudWatch Events. Depending on the pattern of work and application needs, this could be wasteful or useful. If it's rare for there to be work in the queue, it might be more cost-efficient to fire up this polling Lambda only when the number of available messages in the queue (a CloudWatch SQS metric called ApproximateNumberOfMessagesVisible) is above zero. However, that change comes with a bit of extra processing latency: SQS metrics like ApproximateNumberOfMessagesVisible are only published every five minutes, which means messages could sit in the queue up to about four minutes longer than with an every-minute poller. So if latency is important, an every-minute poller works a bit better.

Besides that, we're doing something a little unsatisfying in that we introduce a new Lambda purely for operational needs. More critically, we are left with a conundrum in that to safely delete messages from the queue, we'd like to ensure the work is completed. And the work doesn't get completed until MigrateOneEmployee has done its job. So if we want to ensure all messages are eventually processed (one of the benefits of using SQS in the first place), we're forced to either spread SQS knowledge across both the SQSPoller (which picks up a message) and the MigrateOneEmployee Lambda (which removes it), use RequestResponse Lambda invocation, or combine these two Lambdas into one.
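To illustrate the second of those options, here is a minimal sketch of a poller that invokes the worker synchronously and deletes a message only once the worker has succeeded. The function name `poll_once` and its parameters are hypothetical; `sqs` and `lambda_client` are assumed to be boto3 clients (`boto3.client("sqs")` and `boto3.client("lambda")`), passed in by the caller.

```python
def poll_once(queue_url, function_name, sqs, lambda_client, max_messages=10):
    """Process up to `max_messages` queued messages; return how many succeeded."""
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=max_messages,  # SQS allows at most 10 per call
    )
    processed = 0
    # "Messages" is absent from the response when the queue had nothing to hand out.
    for message in response.get("Messages", []):
        result = lambda_client.invoke(
            FunctionName=function_name,
            InvocationType="RequestResponse",  # block until the worker is done
            Payload=message["Body"].encode("utf-8"),
        )
        # "FunctionError" appears in the response only if the worker itself failed.
        if result["StatusCode"] == 200 and "FunctionError" not in result:
            sqs.delete_message(
                QueueUrl=queue_url,
                ReceiptHandle=message["ReceiptHandle"],
            )
            processed += 1
        # On failure the message is left alone; SQS makes it visible again
        # once its visibility timeout expires, so it will be retried.
    return processed
```

The trade-off is the one described above: the poller now waits on each worker in turn, so it inherits the execution duration limit for however many messages it picks up per run.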

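There are plenty of other caveats and gotchas here, as with many asynchronous systems, including ensuring that the SQS visibility timeout is longer than the total processing duration. Otherwise, a message can become visible again and be picked up a second time while it is still being processed.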

It would be nice if SQS could instead notify us when there are messages for us in the queue, and automatically farm out work to Lambdas, without this extra CloudWatch / SQS-polling infrastructure. The good news is that on April 4, 2018, AWS announced upcoming support for SQS events as direct Lambda triggers, rather than having to rely on CloudWatch events. I'll be excited to see that functionality roll out and hopefully eliminate our need for this extra Lambda, along with solving the reliability issue described above.

[Updated 2018-04-16] The most "official" link I've seen for the AWS Lambda - SQS integration announcement: https://twitter.com/timallenwagner/status/985269102129197056

There are plenty of alternatives to the approach here. For example, in heavy production usage, many folks use EC2 instances as their queue consumers, with Auto Scaling based on the ApproximateNumberOfMessagesVisible CloudWatch metric. This can work well and comes with all the additional configurability and runtime options of EC2. It makes the most sense for scenarios where EC2 instances are going to be doing the work, unlike our poller which simply farms work out to Lambda functions.

We also didn't work on optimizing the MigrateOneEmployee Lambda executions themselves. Reducing the runtime there would have immediate benefits. In our case, these are mostly IO-bound functions, reliant on the performance of potentially-slow external APIs to do their work, so batching that IO up could help. If they were CPU or memory-bound, we might increase the memory allocated to the Lambda (which would also increase the available CPU proportionally).

So far, the approach outlined above works well for this team, and we are excited to see how it develops! These execution duration and concurrency limits are by no means the only interesting constraints that a Serverless architecture presents. For example, Lambda currently limits the data passed in Event invocations to 128 KB, and in RequestResponse invocations to 6 MB. SQS, in turn, limits a message's size to 256 KB. That's a story for another day, but if you're intrigued and want to dig deeper, have a look at the Claim Check pattern from Enterprise Integration Patterns!