"I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes."

Joanna Maciejewska

I'm sure you've seen this quote from Joanna Maciejewska all over social media; I'd bet some money that there are shops already selling t-shirts and mugs with it. Despite seeing it dozens of times, I never thought about it in the context of my day-to-day as a programmer! I, like the quote's author, was more focused on AI hijacking the "classical" creative work such as painting and writing, instead of helping us humans with the most boring tasks.

But it's been almost a year since I started learning more about AI and LLMs, applying them to projects, and using assisted coding. I've gained a new perspective on this quote, this time within the tech world. And it isn’t all sunshine and rainbows.

I had hoped AI would take over my tech “dishes and laundry” — the most frustrating and mundane tasks — freeing me up to focus on the more exciting parts of my job. But ironically, it has consistently fallen short in exactly those areas. In this post, I’ll reflect on how AI struggles with two chores: the frustrating complexity of project setup and the dull grind of manual data handling.

The Struggle of Setup

Someone once asked me, “If there was one thing in software you could magically skip, what would it be?” My answer was immediate: “setup.” It’s rarely fun, always takes longer than expected, and involves wrangling together tooling that shifts from project to project. We’re constantly tempted to try out the new, shiny thing, only to discover a new set of issues when trying to make everything run on multiple environments or deploy.

Thankfully, the software community built great instruments to ease this pain, such as Phoenix, Ruby on Rails, Docker, create-react-app, or even a version checker for React Native. These tools are lifesavers. I’d probably have gone mad without them. Sincere thanks to their contributors.

And then came AI-assisted coding and vibe coding with the temptation and promise to do it even faster, even easier than before.

> “Let AI handle the boring setup so you can jump into the fun stuff”

I've tried that. I've used Cursor and ChatGPT to generate project setups, and not once have they worked without issues.* Even with detailed, structured prompts explaining the exact stack and requirements, the AI would often return setups that either didn’t run properly or ran but were short-sighted, missing critical details for scalability, third-party service integrations, long-term maintenance, or production-readiness. The time I spent going back and forth with AI was far longer than it would have taken me to set it up by hand, at least in a known stack.

*To be clear, I’m talking about fully-fledged projects, with environments, databases, third-party integrations, authentication, and the rest. Sure, AI can spin up a simple React app locally without breaking a sweat.

The Treacherous “Wow, it’s Working!” Trap

What's worse than an AI-generated project that doesn’t run? One that runs perfectly on the first try!

When the setup works on one of the initial tries, it’s tempting to assume everything “is just fine.” But that illusion can be dangerous, masking critical issues you’d normally catch through the trial-and-error of debugging and deeper exploration.

It’s easy to feel a sense of accomplishment — after all, if the system runs quickly, your prompting skills must be on point. With the setup complete, the excitement builds, and it’s tempting to jump straight into feature development. There’s something about the confident, articulate responses from AI agents that contributes to this sense of euphoria. Their clarity can be deceptively reassuring, making it all too easy to assume everything is working as intended. I, personally, must constantly remind myself that AI agents are just fancy word-guessers and that I have to verify everything they produce.

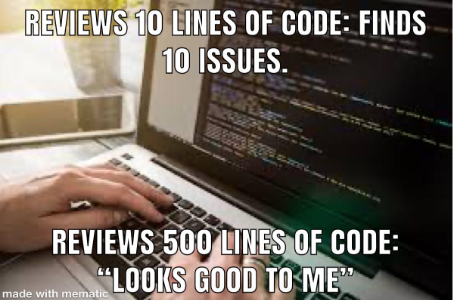

The Advice to “Review AI’s Code as if it Was a Junior Dev’s” is Not Working Out in Practice

Speaking of verifying the AI-generated code — review remains the most effective defense against mistakes and omissions. I often see it phrased as “you should review AI’s code as if it has been written by an intern/junior.” At first glance, this seems perfectly reasonable — it encourages close, critical scrutiny. But on closer inspection, it overlooks a key point: there are many differences between reviewing human coding and AI coding that this advice doesn’t seem to account for.

Firstly, when a junior developer submits a pull request, it typically includes a few files and changes that are scoped to a single task.

In a non-AI world, submitting PRs with many hundreds of lines of code is bad practice because the reviewer won’t pick up on every issue in a change that large. However, in AI-assisted coding, the expectation to review hundreds of lines produced within seconds, dozens of times a day, becomes the norm.

The Review and Ownership Shift When Incorporating AI-assisted Coding

It takes practice to shift your mindset and spend hundreds — or even thousands — of times longer reviewing AI-generated code than the AI took to produce it. It’s a lesson in discipline and patience, especially when implementing the next feature / requirement is a click away.

In addition to that, reviewing code pre-AI rarely meant that you had to run it. Usually developers just read the code, looking for errors, adherence to design patterns and project standards, and checking if the story requirements were met. With AI, the review actually means running it, checking it from the front to back and every direction in between to make sure something didn’t get lost in the rapidly added 1000 lines. It’s not just a standard review process anymore.

In a standard review process, there is an element of trust between the parties which cannot be said about AI. Companies, when hiring developers, often ask them about their working and review style, making sure that the devs pass the “culture fit.” But then the devs end up working with AI, not the other developers, and that whole trust and feeling that we’re all working together under the same cultural north star is gone.

Ultimately, the advice to “treat AI like a junior dev” at a time when juniors struggle to find work and grow into seniors is hurtful, especially when many programmers end up training AI when we could have provided training to our trusted peers instead.

Modularize — Use AI in a Limited or Composable Scope

According to this advice, you should not ask AI to build the entire setup, but rather use AI to help with individual parts. I believe this to be very good advice, and it can be an efficient way to work with AI on the setup. It will decrease the number of lines AI produces and the number of files changed at one time, which will then make the review process more efficient. What you then need to be aware of is that the integrated AI support, such as Cursor, might change other files even if not prompted to do so, so you need to pay attention to it at all times.
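One way to keep an eye on unprompted edits is to compare the files that changed against the scope you actually asked for. The sketch below is only an illustration: the function name and the example paths are hypothetical, and it assumes you already have a list of changed paths (for instance, the output of `git diff --name-only`).

```python
from pathlib import PurePosixPath


def out_of_scope_changes(changed_paths, allowed_dirs):
    """Return the changed paths that sit outside every allowed directory."""
    allowed = [PurePosixPath(d) for d in allowed_dirs]
    flagged = []
    for raw in changed_paths:
        path = PurePosixPath(raw)
        # A path is in scope if an allowed directory is the path itself
        # or one of its ancestors (so "docker" does not match "dockerfiles/").
        in_scope = any(base == path or base in path.parents for base in allowed)
        if not in_scope:
            flagged.append(raw)
    return flagged


# Hypothetical example: the prompt was only about the Docker setup.
changed = ["docker/Dockerfile", "docker/compose.yml", "src/auth/session.py"]
print(out_of_scope_changes(changed, ["docker"]))
```

Anything the function returns is a file the assistant touched without being asked, which is worth reading before accepting the change.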

With the modular approach, AI often proves to be a useful tool, but the main burden still remains on the human to decide what the elements of the setup will be and how they should be connected. This means that the humans are still doing the setup “dishes,” but instead of consulting with other humans on the chunks of the system, they can now use AI.

A Word of Advice

If you're evaluating AI tools for engineering velocity, don't assume "faster setup" = "better setup." Don’t get carried away if the devs tell you that the POC was set up in 30 minutes with Cursor.

AI might help you spin up the project faster, but the hidden cost of poor foundations grows rapidly. Setup demands a kind of foresight, precision, and architectural judgment that AI just doesn’t have. Not yet, anyway. You’re thinking about deployment, cross-platform compatibility, and long-term maintenance. Every decision and line matters. For now, I don’t believe this is a task AI is ready to own, so I’ll keep doing most of the “dishes,” delegating only a very limited scope of the foundational work to AI.

Now onto the second “chore” AI struggles with — the “laundry” of programming.

The Potential for Back-Office Work

AI might excel at creative tasks, but it still isn’t reliable enough to handle precise, mundane work without significant developer orchestration.

In the tech world, AI assistants shine brightest when extending or refactoring existing systems. Give them some wiggle room, a bit of “creative space,” and they’ll often produce surprisingly decent results. Building a locally-running POC that you will bin and do properly after the demo? Yes please, AI is the way!

But if you're aiming to build something precise, reliable, and production-ready, like the good ol’ code with 100% test coverage, just with a touch of “smart,” then it's a different story. Total adherence to rules, minimal room for interpretation, and no hallucinations is where things get tricky. It’s not impossible, but it’s far from effortless.

Let’s set aside project setup for a moment and look at another area where I think AI has real potential: back-office work. By that, I mean handling repetitive, mundane, manual tasks like parsing, tagging, and structuring content. Imagine a university program database where each program is stored as a massive blob of unstructured text including duration, price, benefits, and application criteria. Running that through an LLM to extract structured data would be a game changer.

But if you're doing this for a production system, you can’t afford AI's “creativity” leading to mistakes. The kind of thing that’s obvious to a human — "follow the template, stick to the request" — is surprisingly hard for an LLM to get right consistently.
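To make "follow the template" enforceable rather than hoped for, the model's output can be checked strictly before it reaches the database. Here is a minimal sketch for the university program example, assuming the model is asked to return JSON. The field names and types in `PROGRAM_TEMPLATE` are made up for illustration and are not taken from any real schema.

```python
import json

# Hypothetical template: field name -> expected Python type.
PROGRAM_TEMPLATE = {
    "duration_months": int,
    "price_usd": float,
    "benefits": list,
    "application_criteria": list,
}


def parse_program(raw_json):
    """Parse model output and reject anything that strays from the template."""
    data = json.loads(raw_json)  # malformed JSON raises a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    missing = PROGRAM_TEMPLATE.keys() - data.keys()
    extra = data.keys() - PROGRAM_TEMPLATE.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")

    for field, expected in PROGRAM_TEMPLATE.items():
        value = data[field]
        # Accept whole numbers where a float is expected, but never booleans.
        if expected is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"{field}: expected {expected.__name__}")
        data[field] = value
    return data
```

A record that fails validation can be retried or routed to a human, which is usually safer than letting a "creative" answer slip into production data.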

So how do we make AI reliably handle these kinds of tasks? It takes a lot of work.

Some of the solutions are AI-specific: setting the right sampling temperature (lower values make the output less varied), using few-shot prompts that include worked examples, or fine-tuning. Others are classic engineering practices: improving data structure (no repetition or redundancy, unambiguous field names, consistent formatting), cleaning the input to reduce noise (remove data that we know won’t be useful for the model for the given task), and isolating a tightly-scoped problem for the model to solve. Because the simpler and cleaner the problem, the better the chance AI will handle it flawlessly.
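Two of these practices, cleaning the input and prompting with worked examples, can be sketched in a few lines. This is an illustration under assumptions: the function names are hypothetical, the noise patterns are invented, and the role/content message shape follows a convention common to chat-style APIs rather than any specific provider. Temperature is left out because it is set on the request itself and the parameter name varies by provider.

```python
import re


def clean_input(text, noise_patterns):
    """Drop lines matching known-noise patterns and collapse whitespace."""
    kept = []
    for line in text.splitlines():
        line = re.sub(r"\s+", " ", line).strip()
        if not line:
            continue  # skip blank lines
        if any(re.search(p, line, re.IGNORECASE) for p in noise_patterns):
            continue  # skip lines we know are useless for this task
        kept.append(line)
    return "\n".join(kept)


def build_messages(instruction, examples, document):
    """Build a few-shot message list: instruction, worked examples, real input."""
    messages = [{"role": "system", "content": instruction}]
    for source_text, expected_output in examples:
        messages.append({"role": "user", "content": source_text})
        messages.append({"role": "assistant", "content": expected_output})
    messages.append({"role": "user", "content": document})
    return messages


raw = "  Duration:   2 years \n\nFollow us on Twitter!\nPrice: $12,000"
cleaned = clean_input(raw, [r"follow us"])
messages = build_messages(
    "Extract the program fields as JSON.",
    [("Duration: 1 year", '{"duration_months": 12}')],
    cleaned,
)
```

Keeping the cleaning step separate from the prompt builder means each can be tested on its own, long before a model is involved.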

But hey, notice what’s happening here — a lot of dev effort is going into making the task easy for AI. That’s a bit ironic. We’re doing all this upfront work just so AI can take on a job that, on paper, was supposed to be “simple.” And yet, if we want precision and reliability, this scaffolding is essential. But at least once it’s done it means that our “laundry” is in AI’s hands and we might have one less chore on our list.

Final Remarks

I hoped AI would free us from the tedious parts of our jobs so we could focus on the more fulfilling, creative work. But it currently feels like the opposite: it excels at improvisation, but fails, or at the very least requires constant hand-holding, when it comes to precision, structure, and long-term maintainability. There's a distinct lack of durability in what is being generated, at least with the current AI regime.

We’re not yet in a place where AI can truly take care of our “dishes and laundry” — not without significant oversight, setup, and scaffolding from developers. And that’s okay. We’re still early, and the tools are evolving quickly. But for now, it looks like I’ll keep doing most of the annoying chores myself.

If you’re interested in learning how 8th Light can help your organization implement best-in-class platforms that drive your business outcomes, with or without AI, send us a note and we’ll uncover what we can do together.

P.S. This post is organic, no AI was used to write it.